Linear algebra

is a the cartesian product of the group (from group theory) of real numbers, times.

Vector Space

A vector space is a set with with binary operations where is between elements (vectors) of and is between elements of and elements (scalars) of , such that :

- is an Abelian Group.

-

-

-

- .

Dimension

If for a vectors space , the group , then is the dimension of the vector space.

Thus the set of all matrices in over addition and scalar multiplication is also a vector space of dimension . (In case you still haven’t got it, we are talking about image data) .

Subspaces

A vector subspace is just a subgroup of a vector space.

Affine spaces

For any subspace , any coset of the supspace is called an affine space.

Affine space containing affine space

For an affine space to be a subset of another affine space , we require for some .

Thus we arrive at .

This condition is equivalent to the bases of being in .

Solving a system of equations with rectangular matrices

Consider the equation . To solve this, find a specific solution and as many linearly independent solutions to as possible. Then the general solution is

To find quickly, first do Guassian elimination to get and then express the collumn vectors with multiple non zero entries, say the collumn as a linear combination of collumns with only 1 (and 0s) as entries. Then just put the coefficients as coordinates for and put as the coordinate.

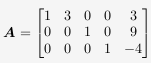

Example : Suppose after row operations, we have arrived at this matrix :

Then using our method for the 2nd collumn, we get this vector :

And doing it for the 5th column vector, we get :

This way you can solve the equation when is a horizontal matrix () . When it’s a vertical matrix, we simply chop off the lower rows after Guassian elimination. Another way to solve a system like this is to apply the Moore-Penrose pseudo-inverse on both sides, given by . Be warned though..computing is not easy.

This solution is also the one we get when we do the least squares approximation.

Least squares

Consider to be a list of row vectors which are really points in some dimensional space and to be a list of values closed to ones assigned to these points by some linear function, say . We want to find the that gives the best approximation and reduces the quantity .

We consider as a function of coordinates of and try to minimise it. It is easy to see that for the minima, we have . These equations written one below other give rise to . Taking transpose on both sides, we get .

This method is rather computation heavy. In practice, we set up an iteration of the form : that decreases in every iteration.

linear mapping

A homomorphism (function distributive over group operation (addition here)), say, , between vector spaces is called a linear mapping if it meets this extra criterion : .

Because of the linearity, we can represent such a function as a matrix.

Suppose is a linear map with expressed using the basis and , then can be expressed by a matrix with the column vector given by the coordinates of as expressed using C .

Note that any element of isn’t simply a list of coordinates but a full physical vector that requires a basis to be expressed as coordinates in.

Rank and nullity

Since a linear map is a homomorphism, according to the first isomorphism theorem, we have and thus , which tells us

Define as the rank of the matrix that represents in some basis and define as the nullity (The kernel is also called the null space, since it gets mapped to 0). It’s easy to see that the rank is number of linearly independent column vectors of and thus is also called the column space of , which is generated by these linearly independent columns. And since is basically the number of columns in , the nullity is the the number of remaining columns.

Column rank and row rank are the same.

Define column rank to the number of independent columns and row rank to be number of independent rows for any matrix.

Since elementary row operations do not change the column rank or row rank (poof left as exercise), thus after Guassian elimination, these quantities will still be same as of the original matrix. And for a reduced row echolon form, both the column and row ranks are just the number of non zero entries in the marix, and thus they are the same.

Change of basis

If a vector space is expressed using two diffent basis and .

Then to go from representation of a vector using to one using , we have to simply multiply by a matrix with column vectors being expressed using .

Suppose the matrices have column vectors as expressed using a basis , then the matrix for changing basis from to is given by .

Now, consider a linear map given by the matrix when expressed using basis for and the same map can also be given by the matrix when expressed using basis for respectively. Suppose is the matrix that changes the basis from to and changes from to . Then,

Expressing everything in some universal frame, we can write :

(Note : Read right to left please)