Models as Graphs

Every node is a random variable and every edge represents a dependency. For a given node, its incoming edges taken together are expressed as a conditional probability distribution of that node given its parents.

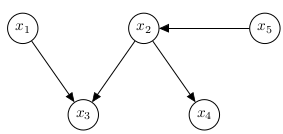

For example, in a graph like this:

Since in the graph depends only on , we can determine the distribution without needing to know the values or probability distributions of any other variables. If we know the probability distributions of the nodes that have no arrows pointing in, then we can in principle work out the marginal (not conditional) probability distribution of every variable. The distributions of these “grandparent” nodes are usually either assumed or approximated empirically.
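As a rough illustration of that last point, here is a minimal sketch on a made-up three-node chain A -> B -> C with binary variables. The node names and all the numbers are assumptions chosen for the example; they are not taken from the graph in the image. Starting from the assumed distribution of the only node without incoming arrows, the marginals of the other nodes follow by summing over parents.

```python
# Hypothetical chain A -> B -> C (names and numbers are illustrative only).
VALUES = (True, False)

# Assumed distribution of the "grandparent" node A.
p_a = {True: 0.3, False: 0.7}

# Conditional tables, indexed as table[parent_value][child_value].
p_b_given_a = {True: {True: 0.9, False: 0.1},
               False: {True: 0.2, False: 0.8}}
p_c_given_b = {True: {True: 0.5, False: 0.5},
               False: {True: 0.1, False: 0.9}}


def marginal_b():
    """P(B) = sum over a of P(a) * P(B | a)."""
    return {b: sum(p_a[a] * p_b_given_a[a][b] for a in VALUES) for b in VALUES}


def marginal_c():
    """P(C) = sum over b of P(b) * P(C | b), reusing the marginal of B."""
    p_b = marginal_b()
    return {c: sum(p_b[b] * p_c_given_b[b][c] for b in VALUES) for c in VALUES}


print(marginal_b())
print(marginal_c())
```

Only the table for A had to be supplied as an unconditional distribution; everything downstream was derived from it and the conditional tables.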

Bayesian update

Notice that when some of the random variables are known, we can directly update the probability distributions of their children, and their children's children, and so on. But we can also update the parent nodes via Bayesian inference, and thus also the sibling nodes. What we can’t (or don’t need to) update are the “co-parent” nodes, that is, nodes that are also parents of the children of our known nodes, as long as those shared children are themselves unobserved. For example, in the graph above, if is known, then we know the pdf of and we can update the pdf of . We now also know the pdf of , but we used the old pdf of to work it out. That old pdf of has not changed: although the pdf of was altered, we altered it using our prior beliefs about , so running Bayesian inference again to update the pdf of would do nothing but echo that belief.
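The pattern described above can be checked numerically on a small example. The sketch below uses a hypothetical network P -> K, P -> S, K -> C <- Q with binary variables, where K is the known node, P its parent, S its sibling, C its child and Q the co-parent. The structure, names and probabilities are all assumptions made for illustration, and the inference is plain brute-force enumeration of the joint distribution rather than an efficient algorithm.

```python
from itertools import product

NAMES = ("P", "K", "S", "Q", "C")  # hypothetical node names


def bern(p_true, value):
    """Probability of a binary value given the probability of True."""
    return p_true if value else 1.0 - p_true


def joint(p, k, s, q, c):
    """Joint probability as the product of each node's conditional table."""
    return (bern(0.4, p)                      # P has no parents
            * bern(0.8 if p else 0.1, k)      # K depends on P
            * bern(0.7 if p else 0.2, s)      # S depends on P
            * bern(0.5, q)                    # Q has no parents
            * bern(0.9 if (k and q) else 0.6 if (k or q) else 0.05, c))  # C depends on K, Q


def prob(query, evidence=None):
    """P(query | evidence) by enumeration; both arguments map names to booleans."""
    evidence = evidence or {}
    num = den = 0.0
    for values in product((True, False), repeat=len(NAMES)):
        world = dict(zip(NAMES, values))
        if any(world[n] != v for n, v in evidence.items()):
            continue
        weight = joint(*values)
        den += weight
        if all(world[n] == v for n, v in query.items()):
            num += weight
    return num / den


known = {"K": True}
for node in ("P", "S", "C", "Q"):
    print(node, prob({node: True}), "->", prob({node: True}, known))
```

With these numbers the parent, sibling and child all shift once K is known, while the co-parent Q keeps its prior of 0.5. Adding C to the evidence would change that, since an observed shared child couples its parents.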

Conditionally independent nodes

Nodes that are independent once the values of some other nodes are given are said to be conditionally independent given the set of nodes . We write this as . One way to check this is to forget that are random variables and treat them as arbitrary constants in the distribution functions that involve them, which lets us delete these nodes from the graph completely.

In this scenario, for to be independent, node must have no effect on , directly or indirectly. That happens when every path that goes from to in the original graph hits some roadblock after the deletion. A roadblock arises in one of two ways. Either the path contains a node from , so the path is now incomplete. Note that such a node must not have both of its arrows on the path pointing towards it, because deleting it would then form a connection between its parent nodes, and information could still be transmitted through this “broken” path. Or the path contains a node that is not in (and none of whose descendants was deleted) with both of its arrows on the path pointing in, making and the grandparent nodes. We know that knowing one grandparent node doesn’t cause us to update our beliefs about the other.
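The path test above can be automated. The sketch below uses a standard equivalent formulation rather than walking paths one by one: keep only the nodes of interest and their ancestors, connect every pair of nodes that share a child (the "connection between its parent nodes" mentioned above), drop arrow directions, delete the given nodes, and see whether the two query nodes are still connected. The function names, the `parents` dictionary format and the example network are assumptions made for this illustration.

```python
def ancestors(parents, nodes):
    """Return the given nodes together with all of their ancestors."""
    seen, stack = set(), list(nodes)
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(parents.get(node, ()))
    return seen


def d_separated(parents, x, y, given):
    """True if x and y are conditionally independent given `given`,
    judged from graph structure alone. `parents` maps node -> list of parents;
    x and y are assumed not to be in `given`."""
    keep = ancestors(parents, {x, y} | set(given))

    # Build the undirected "moral" graph on the kept nodes.
    adj = {node: set() for node in keep}
    for child in keep:
        ps = list(parents.get(child, ()))
        for p in ps:                      # child-parent links
            adj[child].add(p)
            adj[p].add(child)
        for i, p in enumerate(ps):        # links between co-parents
            for q in ps[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)

    # Search from x, never stepping onto a deleted (given) node.
    blocked, seen, stack = set(given), set(), [x]
    while stack:
        node = stack.pop()
        if node in seen or node in blocked:
            continue
        seen.add(node)
        stack.extend(adj[node])
    return y not in seen


# Hypothetical network: P -> K, P -> S, K -> C <- Q.
parents = {"K": ["P"], "S": ["P"], "C": ["K", "Q"]}
print(d_separated(parents, "K", "Q", set()))   # co-parents, child unobserved
print(d_separated(parents, "K", "Q", {"C"}))   # co-parents, child given
print(d_separated(parents, "K", "S", {"P"}))   # siblings, common parent given
```

Restricting to ancestors first is what handles the case of a node with both arrows pointing in: if neither it nor any descendant is among the given nodes, it is dropped before its parents could be linked.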